Last week, we began a new round of professional development. One of our School Improvement Goals is to increase the number of students who are proficient in evidence and elaboration in their writing. In our most recent round of ILEARN testing (the test our state uses to measure student proficiency in math and ELA in grades 3-8), only slightly more than 17% of our students showed proficiency in this area. Our working theory is that if we help our students expand their sentences, add more details, and keep those details on topic, we will also see improvement in other areas of the written portion of the test.

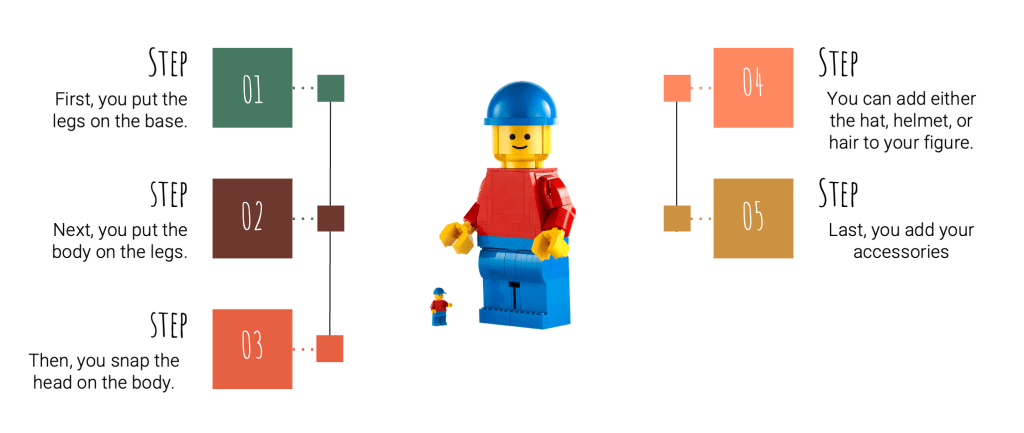

But I also know that when I bring together a group of teachers ranging from kindergarten to fourth grade, some may have difficulty connecting to the data on the screen because "we don't teach those standards." To get everyone thinking about each person's critical role in moving our students toward proficiency, we did an activity with one of my all-time favorite toys: LEGO! Each group received a box with a Minifigure inside, and then we put the following directions on the screen:

We revealed the directions one step at a time. One person completed each step and then passed the Minifigure to the next person. Once all tables had completed their Minifigures, we displayed the question: Which step could you skip and still have a completed Minifigure? The teams reached a consensus: there was no step we could skip and still end up with a completed Minifigure.

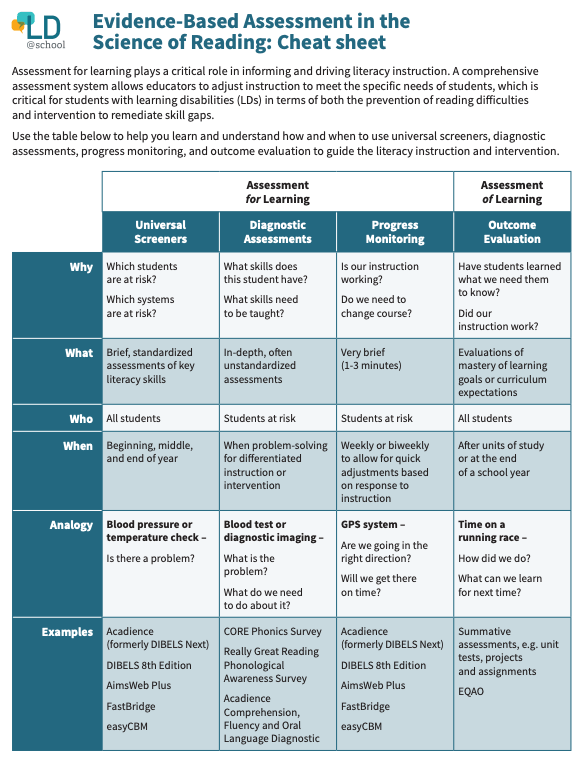

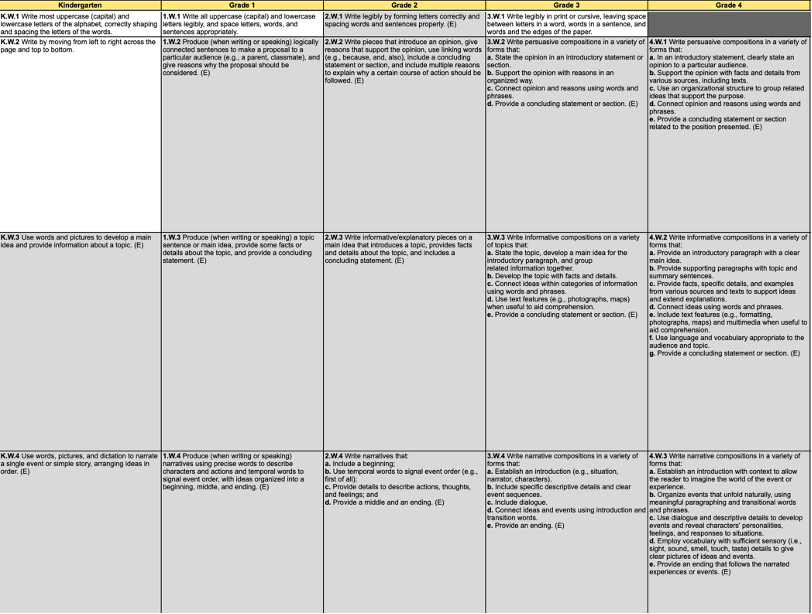

So then, we showed this:

I know the font is tiny, so don't feel you need to zoom in. This is a vertical articulation guide for the essential writing standards for grades K-4. At first glance, without even digging into what the standards say, you might notice that they become more detailed as the grades advance. When we looked at this with our staff, several teachers noted that the standards build upon one another. Look at the third row, which relates to writing informative pieces: every grade level mentions staying on a topic or having a central idea, and every grade level mentions including details, but the requirements and expectations become more detailed with each grade.

The analogy we used as we discussed this was a staircase: the writing process builds one step at a time. Each grade level has a target level of proficiency. If one grade level does not reach its target, the work of the following grades becomes more challenging because those teachers have to play "catch-up" with their students.

The State of Indiana provides rubrics based on the academic standards, broken down into the categories students are assessed on during the writing portion of the ILEARN assessment. There are three focus areas: organization, evidence and elaboration, and conventions. We started with the rubric section for evidence and elaboration (our goal area). Unfortunately, because the ILEARN rubric only covers grades 3-8, we didn't have a clear rubric for grades K-2 in this area. So, our leadership team had done some prework. We dug into the standards and rubrics for grades three and four and then walked the rubrics back, referencing what each grade level's standards included, to create a simple bullet-pointed rubric for all grades K-4.

What we came up with was something that looked like this:

Next, we sent each grade-level team to dig into their standards, the academic frameworks put together by the state, and the prework our leadership team had done. We then took time to define success criteria. We asked ourselves, "What should my students' writing look like to show they have met proficiency in the areas we've identified?" To make sure we clearly understood what proficient writing looks like, we used the Vermont Writing Collaborative writing samples, which had been scored against the Common Core standards. These standards are very close to the ones we use in Indiana. My favorite thing about these samples is that each scored sample includes notes on the page explaining why it landed where it did on the rubric. The scorer also wrote a short section called "Final Thoughts," which helped us better understand what to look for as evidence of proficiency on the standard.

Moving forward, we will use these rubrics and success criteria to identify where our students currently fall in evidence and elaboration on a cold write (a piece of writing students complete without any direct instruction or support), and then plan instructional strategies that meet their needs. It was important to us to look at cold writes rather than pieces students may have been working on as part of a current unit, because a cold write shows what our students can do entirely on their own, without any direct teaching to support the writing process.

Much like building a LEGO set, learning to write is a step-by-step process, and students grow through the vertical articulation of the standards one grade at a time. No step can be missed if our students are going to reach proficiency. ILEARN scores are often attributed to the classroom or grade level that took the assessment. I want our teachers to be fully aware that without the foundational steps that must happen in kindergarten, first grade, and second grade, our students will never reach proficiency in grades three and four. Those scores represent all of our work to build a student as a writer. If you skip a page in your LEGO instructions, you will run into problems later in the build. In the same way, if we miss a step in building proficient writers, our students will struggle as they move through the grades.

What are your thoughts? Have you, like me, ever led a professional development session and felt that some participants weren't fully engaged because the data "wasn't from their grade level"? Have you ever been the one who disengaged? How might you think differently about your role moving forward? Let us know your thoughts in the comments below!